Benchmarks

Pages: 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18

|

|

Chris Hall. The zeropain output you are getting from LanMan98 will affect your benchmarks. I got a 1MB zp file when compiling the ROM and it added about 90secs to the compilation. |

|

|

Interesting that the vastly superior Memory speed compared to the ARMX6 doesn’t translate to better RAMFS performance. Yeah, making the pages cacheable would help. But we’d also want to improve the way that cache maintenance is handled within the OS – whenever a cacheable page is being unmapped (or moved elsewhere) the kernel flushes that page from the cache. This was a requirement for older ARMs (where the cache only held the virtual address of the page), but with modern ARMs (ARMv6+) the caches generally work with physical addresses, so the only time you need to flush a page from the cache is when you’re changing the cacheability attribute of the underlying physical page. If we can teach the OS about that, then it would allow us to have a cacheable RAM disc without incurring any extra performance penalty in the PMP side of things (where it keeps remapping pages to allow the RAM disc to only occupy a small logical window). Getting rid of the cache flushes when unmapping pages would also help make the Wimp a bit snappier. |
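To make the flushing rule described above concrete, here is a minimal sketch in C. It is a simplified model, not RISC OS kernel code: the names `cache_kind`, `page_op` and `needs_cache_flush` are all hypothetical, and it assumes the only things that matter are how the cache is tagged and what is being done to the page.

```c
#include <stdbool.h>

/* Hypothetical model only; none of these names exist in the RISC OS kernel. */
typedef enum {
    CACHE_VIRTUALLY_TAGGED,   /* older ARMs: cache lines keyed by virtual address */
    CACHE_PHYSICALLY_TAGGED   /* ARMv6+ (generally): lines keyed by physical address */
} cache_kind;

typedef enum {
    PAGE_UNMAP,               /* page removed from the logical address space */
    PAGE_MOVE,                /* page remapped to a different logical address */
    PAGE_CHANGE_CACHEABILITY  /* cacheable attribute of the physical page changes */
} page_op;

/* Returns true if the page should be flushed from the cache before `op`. */
bool needs_cache_flush(cache_kind kind, bool page_is_cacheable, page_op op)
{
    if (!page_is_cacheable)
        return false;               /* in this model a non-cacheable page has no lines */
    if (op == PAGE_CHANGE_CACHEABILITY)
        return true;                /* needed whichever way the cache is tagged */
    /* Unmap or move: only a virtually tagged cache is left holding stale addresses. */
    return kind == CACHE_VIRTUALLY_TAGGED;
}
```

Under this model the PMP remapping used by the RAM disc would map to `PAGE_MOVE`, which costs a flush only on the virtually tagged case.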

|

|

Benchmarks updated for the Titanium board, see here. |

|

|

As you have used all of these machines, I wondered if you had any thoughts on compiling speed in real use. Your benchmark of a ROM unpack and compile is not an everyday process: once you have compiled the ROM, recompiling is a lot quicker, so a slow first unpack and compile is not really a problem. What I am interested in is the time taken to recompile, from a hard disc, a ROM that has already been compiled. My Iyonix, for example, recompiles the Pi ROMs with just the make, install and join ROM options ticked in 130 secs. Presumably this is related to the FS Read speed from hard disc, in which case it’s not worth buying a new machine as the improvement over an Iyonix isn’t great. |

|

|

I’m not sure what the answer is (I’m yet to move away from using my Iyonix as my main machine, despite intending to do so for several years). But I’d expect the “HD read” metric to be more indicative of performance than the “FS read” metric. There are lots of places where block reads will be used (loading amu 2×100 times, loading the 100 modules to link the final ROM). I’d also assume that amu and the compiler use block reads for any source files they access (remember that they would have been optimised for running on Archimedes machines) – although it’s possible they’re just relying on clib’s default FILE* buffer settings (4k buffer size – not great for modern machines, but enough to cut down on some of the overheads) |
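For anyone wanting to experiment with the buffer size point, standard C lets a program replace the default `FILE*` buffer with `setvbuf`. The sketch below is only an illustration of that call: `open_with_buffer` and `count_bytes` are made-up helper names, and whether amu or the compiler do anything like this is not something I know.

```c
#include <stdio.h>

/* Hypothetical helper: open `path` for binary reading with a fully buffered
   stream of `buf_size` bytes. Returns NULL on any failure. */
FILE *open_with_buffer(const char *path, size_t buf_size)
{
    FILE *f = fopen(path, "rb");
    if (f == NULL)
        return NULL;
    /* setvbuf must be called before any other operation on the stream.
       Passing NULL asks the C library to allocate the buffer itself. */
    if (setvbuf(f, NULL, _IOFBF, buf_size) != 0) {
        fclose(f);
        return NULL;
    }
    return f;
}

/* Reads the stream one character at a time; with a large buffer most of
   these calls are served from memory rather than from the filing system. */
long count_bytes(FILE *f)
{
    long n = 0;
    while (getc(f) != EOF)
        n++;
    return n;
}
```

A caller might use `open_with_buffer("somefile", 64 * 1024)` in place of a plain `fopen`; the 64k figure is just an example value to try, not a recommendation.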

|

|

I think block reads (using OS_GBPB) are measured by FS read whereas LOAD and SAVE are measured by HD Read/Write. |

|

|

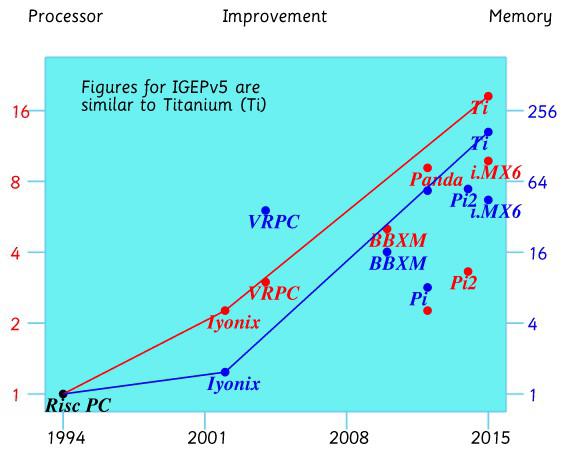

Review of Titanium updated, with extra photos showing the new keyboard. Also included is a review of both the ARMX6 and the IGEPv5 here – click the ‘ARMX6’, ‘IGEPv5’ or ‘Titanium’ tabs for the reviews. Raw benchmarks do not always tell the full story but, making due allowance for this, the performance gains over the last 20 years still look quite impressive:

|

|

|

IGEPv5 still does SATA via USB? I thought Chris Evans announced at the London show that direct SATA support was completed. |

|

|

There are still issues with ADFS/SATA, being worked on. Not yet working reliably. This only affects the IGEPv5. |

|

|

We were demonstrating a beta version.

Piccolo Systems tell me it is not only the IGEPv5 that has the problem. One of the difficulties is that it is intermittent; fortunately a way has been found to comparatively reliably demonstrate the problem, and it may also help point to the cause. Hopefully it will be fixed soon. |

|

|

“One of the difficulties is that it is intermittent, fortunately a way has been found to comparatively reliably demonstrate the problem, it may also help point to the cause.”

Can you be more specific please? With certain combinations I can get ‘drive not ready’ (08) errors on my computer at a small number of nearby disc addresses (and it won’t let me reformat it, same error 08). If I reset the computer, however, the errors go away again and I can reboot, compile a ROM etc. with no errors. I think the problem was due to a rather ambitious HardDisc image so I have reverted to a vanilla daily build HD4 image. I am gradually adding apps and things as the error does not present on a plain HardDisc4 image…

Benchmarks for Titanium updated – some oddities as one or two are very slow, particularly ShareFS HD write and icon plotting in 32k colours. I only chose 32k colours because the MDF I am using at the moment gives pink patches at 1920×1200 in 16M colours, and the screen breaks up on screen writes and scrolls, but it is fine at 32k. At 32k the icon plotting benchmark is 145%; at 16M it is 1991%. |

|

|

Speaking of oddities, any idea why Rect Copy is so slow on the ARMX6? |

|

|

It is data corruption when reading from the SATA drive: the data on the drive has never been corrupted, but programs reading settings or data sometimes report them as corrupt (retrying nearly always works). This doesn’t sound like the same problem. The corruption is in 64 byte blocks, which tends to suggest it is not the SATA driver directly, as it deals in a minimum of 1K blocks of data. We’re wondering if the incredible speed of the … I’m using the RapidO Ig as my main machine for DataPower and browsing; by copying my data files from the SATA drive to RAM disc on booting it works without errors.

Which computer are you meaning? |

|

|

Bit strange of you to bring Mpro up in your post there, Chris. It’s running happily up to 600Mb/sec on my RISCube SSD without any problems, and I’ve only heard of disc related issues (dead/faulty/dying drives aside, obviously) occasionally on A9home and RO 3.7 with the >2Gb drive bug (adfsbuffers). AFAIK we’ve been happily running SATA on ARMX6 this last year and a majority of users run Mpro. However, as I emphasised in my talks/presentations, ARMX6 was always optimised first and foremost for stability, due to the commercial needs that allowed it to happen – can’t have database or email servers corrupting data or locking/freezing etc. If there was ever a choice between speed or stability, John chose the latter, although fortunately he usually managed ending up with both. |

|

|

“Which computer are you meaning?”

Titanium. But the errors seem to have stopped: they were occurring when I had three things connected to the SATA sockets on the PCB: an SSD boot drive, a lead with a long data cable not connected to anything (designed for a second plug-in SSD) and a DVD drive (which I haven’t got working yet). Connecting the second drive seemed to coincide with the intermittent ‘not ready’ errors stopping but I’m still testing. As the errors were intermittent (but once they started they were frequent), didn’t involve corruption of the drive and were ‘cured’ by a reset, it is difficult to be sure they’ve gone!

“The corruption is in 64 byte blocks”

At the end of the 1k block? Could it be memory paging being slightly premature on borderline occasions? Does it go if you turn off lazy task swapping (e.g. add debug code)? Err! Now just gone past my level of understanding!

“SATA speed tests we’ve done on the RapidO Ig are significantly faster than on any other RISC OS hardware.”

I’m getting 176Mbyte/s read speed on the Titanium with a Crucial MX200 250GB SSD rated at 550Mbyte/s maximum read speed and using a 6Gb/s data lead (the other leads are ‘ordinary’ 3Gb/s data leads). |

|

|

Probably not related, but with NFS client/server (Sunfish/Moonfish), under certain circumstances, there was some corruption at the end of files. Not sure if it’s related to network or file systems. |

|

|

Sorry Andrew, I wasn’t meaning to cast any aspersions on the excellent MPro. I’m sure that none of the problems people have had are down to MPro, but it is one of the few programs on RISC OS that is disc intensive, so I was using it as an example of what can show up problems within the OS. |

|

|

Leave the debug code in and call it fixed. |

|

|

Programming by witchcraft? If it is a DDE application that works in debug mode but fails (randomly) in real life, check you aren’t using a pointer before it has been initialised. Debug sanitises variables and such, where real life does not. Also consider calloc() instead of malloc()… |
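As a small illustration of the `calloc()` suggestion, the sketch below builds a linked-list node whose pointer field is never left holding whatever happened to be in memory. The `node` type and both function names are invented for the example and have nothing to do with the ADFS/SATA code being discussed.

```c
#include <stdlib.h>

/* Hypothetical list node, used only to illustrate the point. */
typedef struct node {
    struct node *next;
    int value;
} node;

/* With malloc(), `next` would hold leftover heap contents until assigned,
   which a debug build may happen to hide. calloc() zero-fills the block,
   and the explicit assignment below avoids relying on all-bits-zero being
   a null pointer. */
node *node_new(int value)
{
    node *n = calloc(1, sizeof *n);
    if (n == NULL)
        return NULL;
    n->next = NULL;
    n->value = value;
    return n;
}

/* Walks the list; this is only safe because every `next` is initialised. */
int list_length(const node *head)
{
    int len = 0;
    for (; head != NULL; head = head->next)
        len++;
    return len;
}
```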

|

|

“Leave the debug code in and call it fixed.”

Debugging by witchcraft is more likely – timing-specific issues can be a right pain to try and get to the bottom of. But I’m sure Ben is well-versed in the dark arts. All we can do is hope that it’s a software bug (64 bytes sounds suspiciously like it could be related to cache line size – suggesting a cache/ARMop issue) and not a hardware bug (e.g. SATA controller saying that the transfer has finished but it hasn’t finished writing the data back to memory yet). |

|

|

“Leave the debug code in and call it fixed.”

One problem with that is that a side effect of including debug code is that lazy task swapping is disabled, which slows things down, AIUI. |

|

|

Isn’t that what most debugging amounts to? |

|

|

Debugging is the process of discovering and removing bugs from programs, therefore programming is the process of putting the bugs there in the first place. ;-} |

|

|

Is the corruption at the start/end of the DiscOp, randomly throughout the DiscOp or on a page boundary start/end? You can test the latter by performing a DiscOp across allocated and unallocated pages, and comparing the result. Does the new ADFS flush the cache for a DiscOp range? Is the source available? It could also be an ARMv7 deprecation or erratum causing the issue; has the compiled code been checked for these? |
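One way to answer the "where is the corruption" question is to read the same data twice (or compare against a known-good copy) and report which 64 byte lines differ. The following is a rough sketch of such a comparison, under stated assumptions: the 64 byte line and 4K page sizes are assumed rather than queried from the hardware, the buffers are assumed to start on a page boundary, and both function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define LINE_SIZE 64u    /* assumed cache line size */
#define PAGE_SIZE 4096u  /* assumed page size */

/* Counts the LINE_SIZE blocks in which `actual` differs from `expected`.
   If any differ and `first_offset` is not NULL, the offset of the first
   differing block is stored there. */
size_t count_bad_lines(const unsigned char *expected,
                       const unsigned char *actual,
                       size_t len, size_t *first_offset)
{
    size_t bad = 0;
    for (size_t off = 0; off < len; off += LINE_SIZE) {
        /* The final block may be shorter than a full line. */
        size_t n = (len - off < LINE_SIZE) ? len - off : LINE_SIZE;
        if (memcmp(expected + off, actual + off, n) != 0) {
            if (bad == 0 && first_offset != NULL)
                *first_offset = off;
            bad++;
        }
    }
    return bad;
}

/* True if the line at `line_offset` is the first or last line of a page. */
bool line_touches_page_edge(size_t line_offset)
{
    size_t in_page = line_offset % PAGE_SIZE;
    return in_page == 0 || in_page == PAGE_SIZE - LINE_SIZE;
}
```

If the bad lines cluster at page edges, that would point towards the paging theory; if they are scattered, a cache maintenance or hardware timing problem looks more likely.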

|

|

Have there been any benchmarks for USB3?
